Destinations

Overview

When you configure a file destination, a schema-enforced file of each submission is uploaded to that destination. This makes integration with other systems straightforward, because the receiving system can rely on the file’s schema. Depending on the destination type (e.g. AWS S3), an automatic handling trigger can be set up on the receiving end.

Output file

The output file will be of type Parquet:

- Description: Columnar storage format optimized for analytics

- Use Cases: Data warehousing, analytics, big data processing

- Benefits: High compression, fast query performance
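On the receiving end, a quick sanity check can confirm that a delivered file is a Parquet file before it is passed to downstream tooling. The sketch below relies only on the Parquet format's 4-byte `PAR1` marker at the start and end of a file. The function name `looks_like_parquet` is hypothetical, and the check does not validate the schema or the contents.

```python
import os

PARQUET_MAGIC = b"PAR1"  # 4-byte marker defined by the Parquet file format


def looks_like_parquet(path: str) -> bool:
    """Return True if the file starts and ends with the Parquet magic bytes."""
    # Smallest conceivable layout: leading magic (4 bytes),
    # footer length (4 bytes), trailing magic (4 bytes).
    if os.path.getsize(path) < 12:
        return False
    with open(path, "rb") as f:
        head = f.read(4)
        f.seek(-4, os.SEEK_END)
        tail = f.read(4)
    return head == PARQUET_MAGIC and tail == PARQUET_MAGIC
```

A file that passes this check can then be opened with a Parquet reader of your choice to inspect its columns.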

Storage Destinations

FussyData supports multiple cloud storage providers and protocols for delivering your processed data. Each destination can be configured with specific settings to meet your requirements.

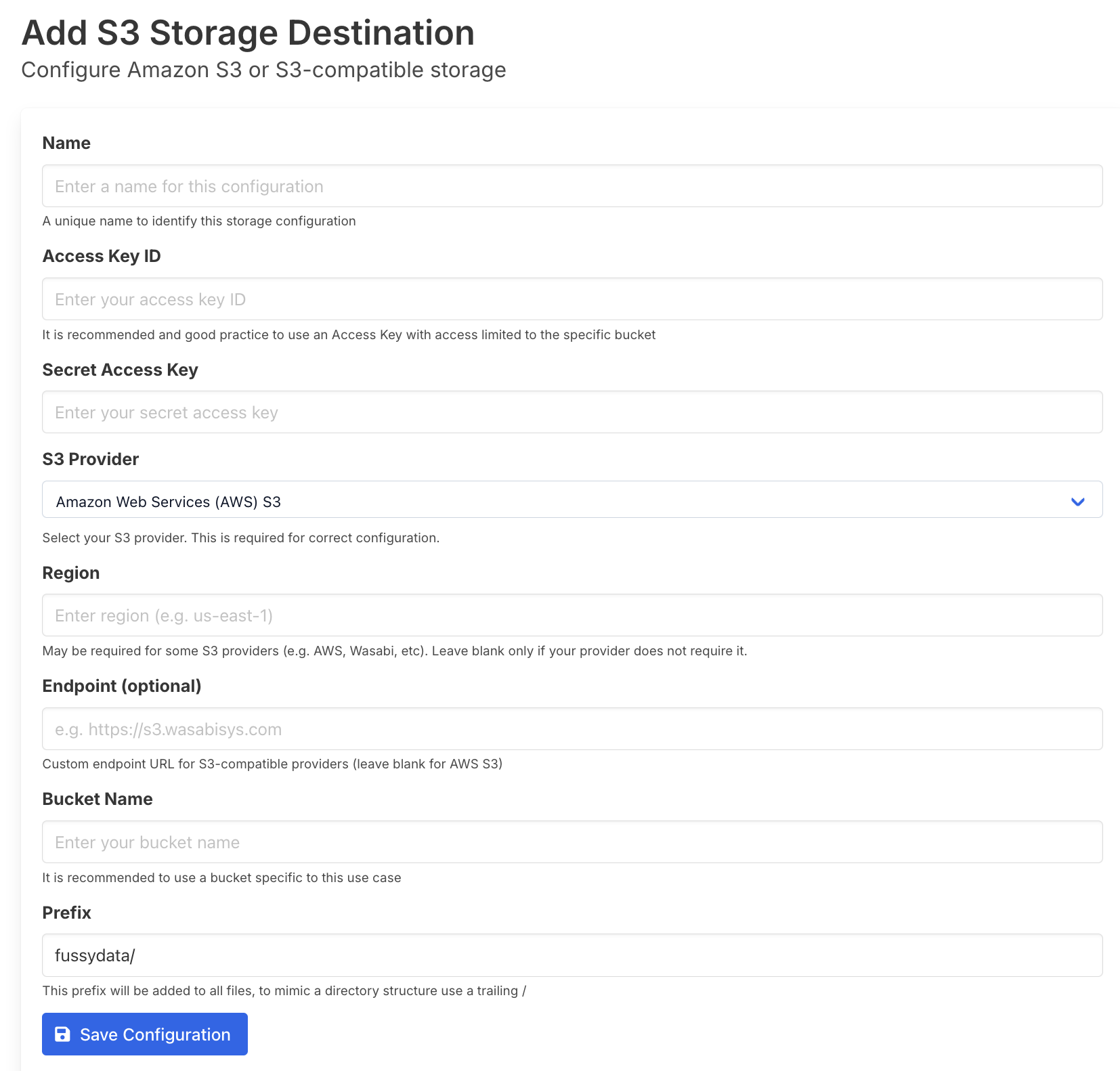

Amazon S3 Compatible Storage

Configure Amazon S3 or S3-compatible storage for your data exports.

Configuration Fields

- Name: A unique name to identify this storage configuration

- Access Key ID: As a good practice, use an access key whose permissions are limited to the specific bucket

- Secret Access Key: The secret key corresponding to your Access Key ID

- S3 Provider: Select your S3 provider. This is required for correct configuration.

- Region: Required by some S3 providers (e.g. AWS, Wasabi). Leave blank only if your provider does not require it.

- Endpoint (optional): Custom endpoint URL for S3-compatible providers (leave blank for AWS S3)

- Bucket Name: It is recommended to use a bucket specific to this use case

- Prefix: This prefix is added to all files. To mimic a directory structure, end it with a trailing /
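The effect of the trailing / in the prefix can be illustrated with a small sketch. It assumes the prefix is joined to the file name by plain concatenation, which is what the field description suggests but is not confirmed here. The function `object_key` and the file name `orders.parquet` are hypothetical.

```python
def object_key(prefix: str, filename: str) -> str:
    """Join a destination prefix and a file name by plain concatenation (assumed behavior)."""
    return f"{prefix}{filename}"


print(object_key("submissions/", "orders.parquet"))  # submissions/orders.parquet
print(object_key("submissions", "orders.parquet"))   # submissionsorders.parquet
print(object_key("", "orders.parquet"))              # orders.parquet
```

Under that assumption, only the first form places the file under what looks like a `submissions` directory in the bucket.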

Supported S3 Providers

- Amazon Web Services (AWS) S3

- Wasabi

- DigitalOcean Spaces

- MinIO

- Other S3-compatible services

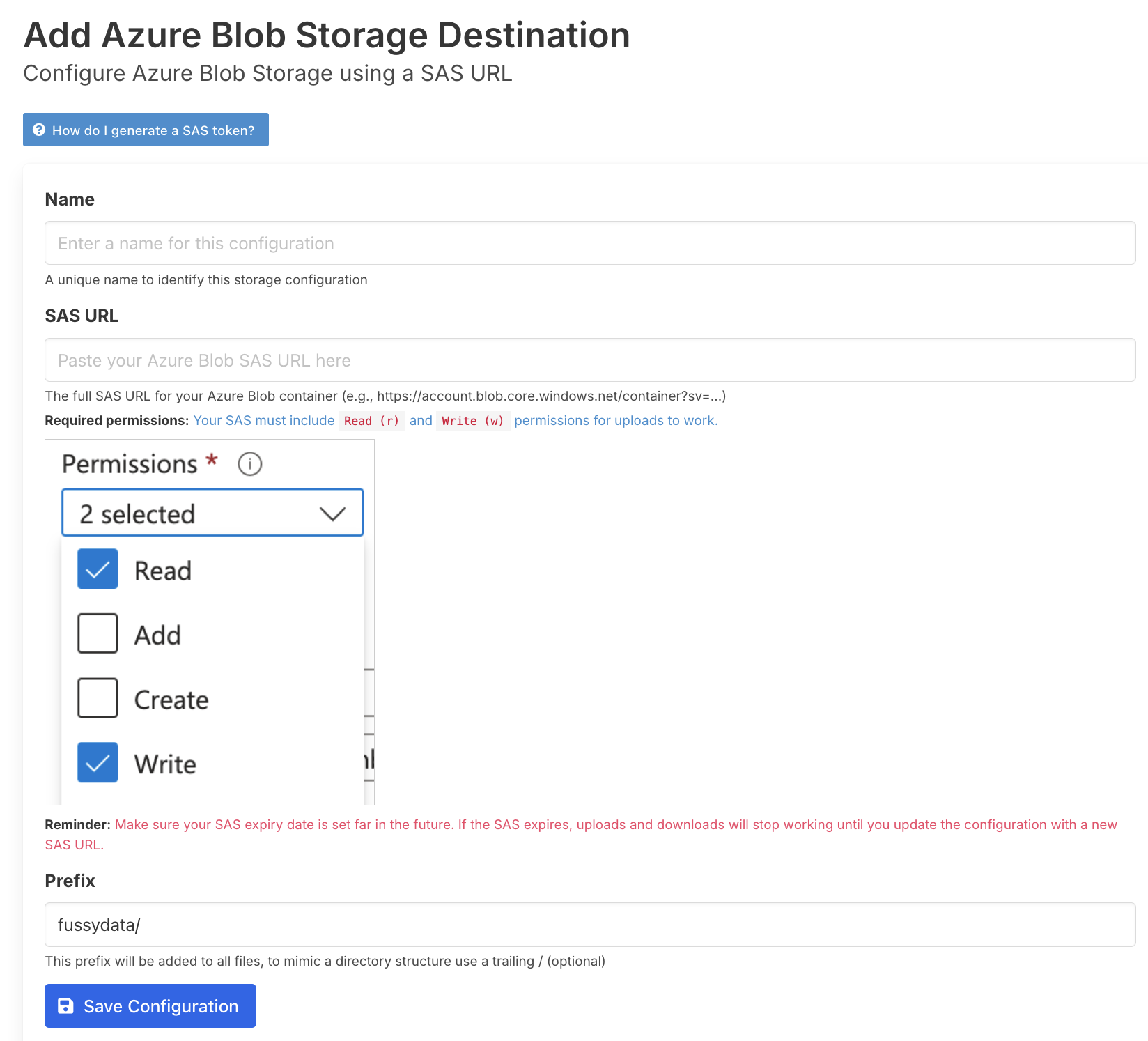

Azure Blob Storage

Configure Azure Blob Storage using a SAS URL for secure access to your containers.

Configuration Fields

- Name: A unique name to identify this storage configuration

- SAS URL: The full SAS URL for your Azure Blob container (e.g., https://account.blob.core.windows.net/container?sv=…)

- Required permissions: Your SAS must include Read (r) and Write (w) permissions for uploads to work.

- Important: Make sure your SAS expiry date is set far in the future. If the SAS expires, uploads and downloads will stop working until you update the configuration with a new SAS URL.

- Prefix: This prefix is added to all files. To mimic a directory structure, end it with a trailing / (optional)

SAS URL Requirements

Your SAS URL must include the following permissions:

- Read (r): Required for downloading files

- Write (w): Required for uploading processed data
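Before pasting a SAS URL into the configuration, it can help to check its permissions and expiry locally. The sketch below reads the standard SAS query parameters `sp` (signed permissions) and `se` (signed expiry) from the URL. The function name `check_sas_url` is hypothetical, and the check does not verify the signature or contact Azure.

```python
from datetime import datetime, timezone
from typing import List, Optional
from urllib.parse import parse_qs, urlsplit


def check_sas_url(sas_url: str, now: Optional[datetime] = None) -> List[str]:
    """Return a list of problems found in a SAS URL (empty if none were found)."""
    now = now or datetime.now(timezone.utc)
    params = parse_qs(urlsplit(sas_url).query)
    problems = []

    # "sp" holds the signed permissions as a string of letters, e.g. "rw".
    permissions = params.get("sp", [""])[0]
    for letter, label in (("r", "Read"), ("w", "Write")):
        if letter not in permissions:
            problems.append(f"missing {label} ({letter}) permission")

    # "se" holds the signed expiry time in UTC, e.g. 2030-01-01T00:00:00Z.
    expiry_raw = params.get("se", [""])[0]
    if not expiry_raw:
        problems.append("no expiry (se) found")
        return problems
    expiry = datetime.fromisoformat(expiry_raw.replace("Z", "+00:00"))
    if expiry.tzinfo is None:
        # Date-only values carry no time zone; treat them as UTC.
        expiry = expiry.replace(tzinfo=timezone.utc)
    if expiry <= now:
        problems.append(f"SAS expired at {expiry_raw}")
    return problems
```

An empty list means the URL carries both required permissions and an expiry in the future; any other result lists what to fix before saving the configuration.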

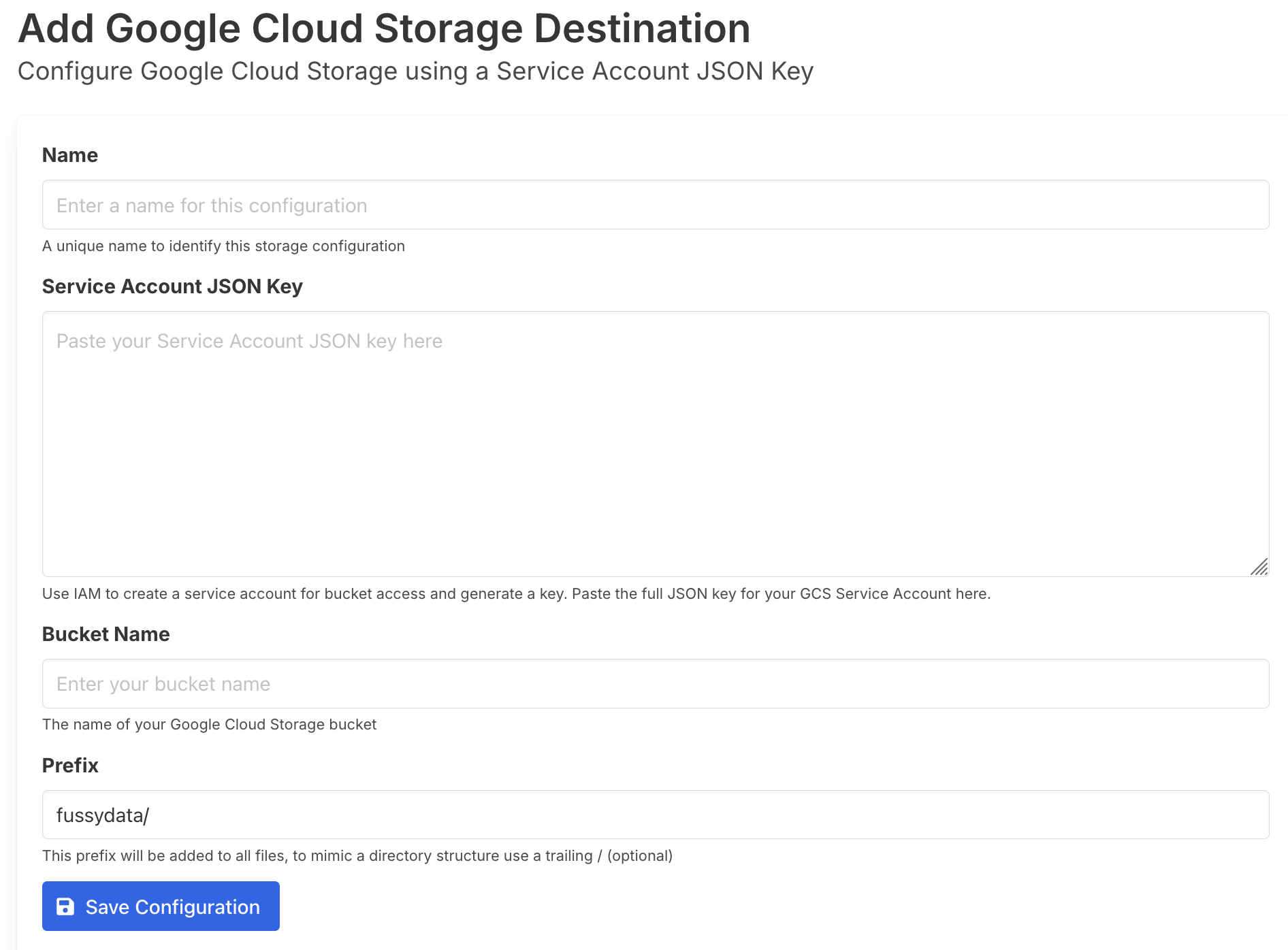

Google Cloud Storage

Configure Google Cloud Storage using a Service Account JSON Key for secure authentication.

Configuration Fields

- Name: A unique name to identify this storage configuration

- Service Account JSON Key: Use IAM to create a service account for bucket access and generate a key. Paste the full JSON key for your GCS Service Account here.

- Bucket Name: The name of your Google Cloud Storage bucket

- Prefix: This prefix is added to all files. To mimic a directory structure, end it with a trailing / (optional)

Service Account Setup

- Go to Google Cloud Console → IAM & Admin → Service Accounts

- Create a new service account or select an existing one

- Grant the service account the Storage Object Admin role for your bucket

- Generate a JSON key and paste it into the configuration
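A truncated or partially copied key is a common cause of authentication failures, so a local check before pasting can be useful. The sketch below verifies that the text is valid JSON and contains a few fields that Google service account key files normally include. The function name `check_service_account_key` and the choice of which fields to check are assumptions for illustration; the check does not confirm that the key is active or has the right role.

```python
import json
from typing import List

# Fields normally present in a Google service account key file.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_key(raw_json: str) -> List[str]:
    """Return a list of problems found in a pasted JSON key (empty if none were found)."""
    try:
        key = json.loads(raw_json)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["expected a JSON object"]

    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)]
    # Service account keys identify themselves with type "service_account".
    if key.get("type") and key["type"] != "service_account":
        problems.append(f"unexpected type: {key['type']}")
    return problems
```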

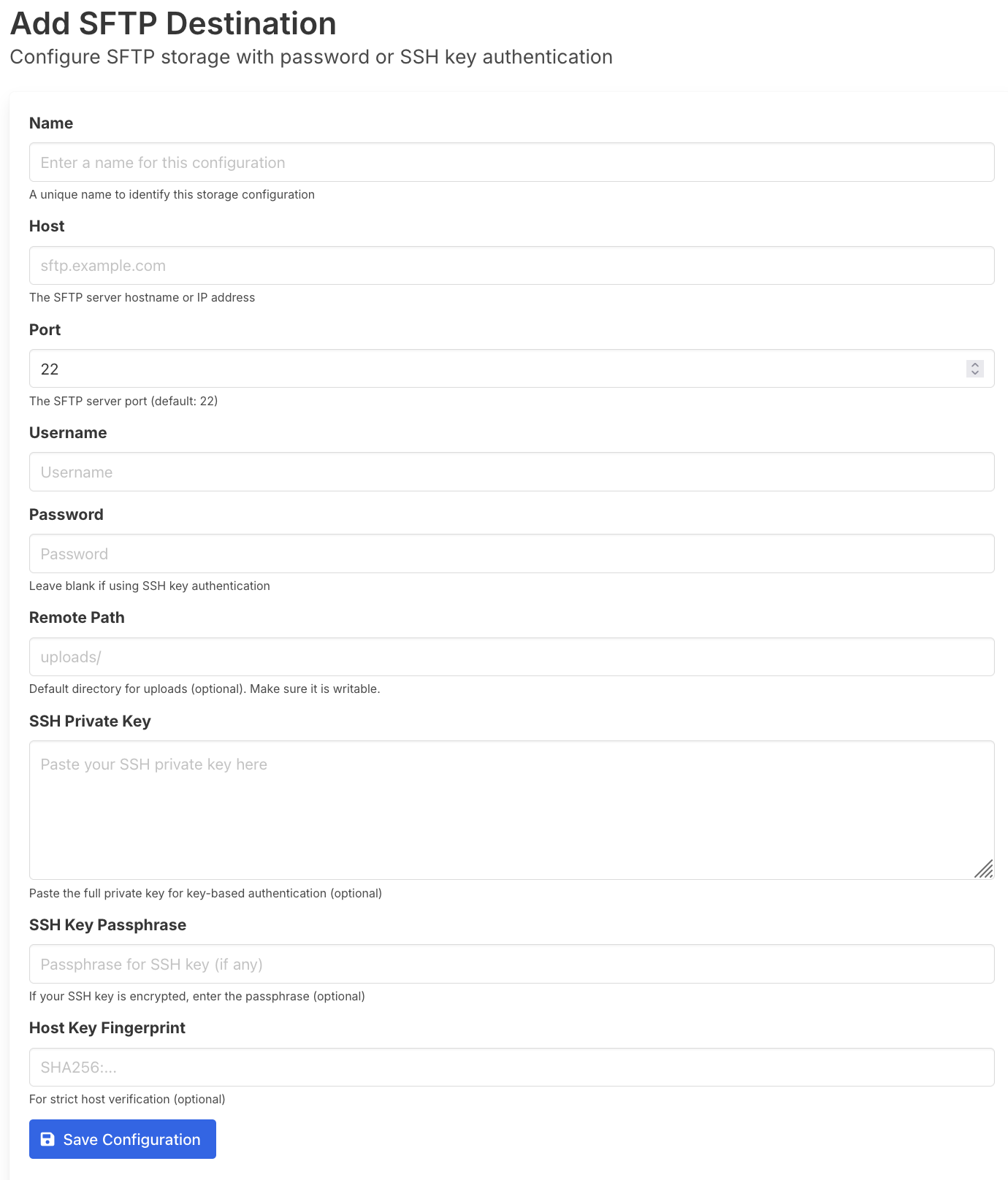

SFTP Storage

Configure SFTP storage with password or SSH key authentication for secure file transfers.

Configuration Fields

- Name: A unique name to identify this storage configuration

- Host: The SFTP server hostname or IP address

- Port: The SFTP server port (default: 22)

- Username: Your SFTP username

- Password: Leave blank if using SSH key authentication

- Remote Path: Default directory for uploads (optional). Make sure it is writable.

- SSH Private Key: Paste the full private key for key-based authentication (optional)

- SSH Key Passphrase: If your SSH key is encrypted, enter the passphrase (optional)

- Host Key Fingerprint: For strict host verification (optional)
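If you want to use the Host Key Fingerprint field, the fingerprint can be derived from the server's public host key. The sketch below computes an OpenSSH-style SHA256 fingerprint from a public key line of the form `<key-type> <base64-blob> [comment]`. Whether FussyData expects exactly this format is an assumption, and the function name `sha256_fingerprint` is hypothetical.

```python
import base64
import hashlib


def sha256_fingerprint(public_key_line: str) -> str:
    """Compute an OpenSSH-style SHA256 fingerprint from a public key line."""
    # A public key line looks like: "<key-type> <base64-blob> [comment]".
    fields = public_key_line.split()
    if len(fields) < 2:
        raise ValueError("expected '<key-type> <base64-blob> [comment]'")
    blob = base64.b64decode(fields[1], validate=True)
    digest = hashlib.sha256(blob).digest()
    # OpenSSH prints the digest as unpadded base64 with a "SHA256:" prefix.
    return "SHA256:" + base64.b64encode(digest).decode("ascii").rstrip("=")
```

The result should match what `ssh-keygen -lf` reports for the same public key file, which gives an independent way to confirm the value.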

Authentication Methods

- Password Authentication: Provide username and password

- SSH Key Authentication: Provide username and SSH private key (recommended for better security)

Linking Destinations to Requests

Once you have configured your storage destination, you need to link it to a submission request to enable automatic file uploads.

Steps to Link a Destination

- Go to “Submission Requests”, and click “Details” for a Submission Request.

- Click “Edit File Request” and add your new destination under the destinations section.

The linked destination automatically receives the processed Parquet file when a submission is completed, so your data arrives in your chosen storage location with its schema enforced.
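For an AWS S3 destination, one way to react to each delivered file is a function triggered by S3 event notifications. The sketch below extracts the bucket and object key from the standard S3 event structure and keeps only objects under a given prefix. The names `delivered_objects` and `handler`, and the prefix value `submissions/`, are hypothetical; configuring the notification itself is done on the AWS side and is not shown.

```python
from typing import List, Tuple
from urllib.parse import unquote_plus


def delivered_objects(event: dict, prefix: str = "") -> List[Tuple[str, str]]:
    """Extract (bucket, key) pairs from an S3 event notification, filtered by key prefix."""
    found = []
    for record in event.get("Records", []):
        s3 = record.get("s3", {})
        bucket = s3.get("bucket", {}).get("name")
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(s3.get("object", {}).get("key", ""))
        if bucket and key and key.startswith(prefix):
            found.append((bucket, key))
    return found


def handler(event, context):
    """Hypothetical AWS Lambda entry point: log each newly delivered file."""
    for bucket, key in delivered_objects(event, prefix="submissions/"):
        print(f"New submission file: s3://{bucket}/{key}")
```

Filtering on the same prefix that is set in the destination configuration keeps the handler from reacting to unrelated objects in a shared bucket.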